We want to minimize a convex, continuous, and differentiable cost function . In this blog post we discuss the most popular algorithm, gradient descent, using linear regression, and build it from scratch in Python. A few highlights:

- Code for linear regression and gradient descent is generalized to work with a model for any .

- Gradient descent is implemented using an object-oriented approach.

- Impact of the learning rate on convergence (divergence) is illustrated.

Throughout this post I use a bold font for vectors (e.g. , ) and a regular font for scalars and functions (e.g. , ).

Theoretical idea of the gradient descent

Taylor expansion

Simplify the function you would like to minimize by using the first-order Taylor polynomial. Provided that the norm is small (i.e. is very close to ), we can linearly approximate the function by its first derivative:

where is the gradient of . This approximation is valid only when the step size is small. We will return to this in the learning rate discussion.

Note: We may also use the second-order Taylor polynomial to make a quadratic approximation of the function. Examples are the Newton and Gauss-Newton methods. Second-order optimization methods consume more computational power and are less popular for machine learning model training.

Gradient

The gradient vector

gives the direction of steepest ascent on the surface of the function , and the rate of change in this direction is . 1

Convergence

Consequently, points in the direction of the steepest descent. Setting for a sufficiently small guarantees to decrease the function2:

So the iterations of steepest descent are:

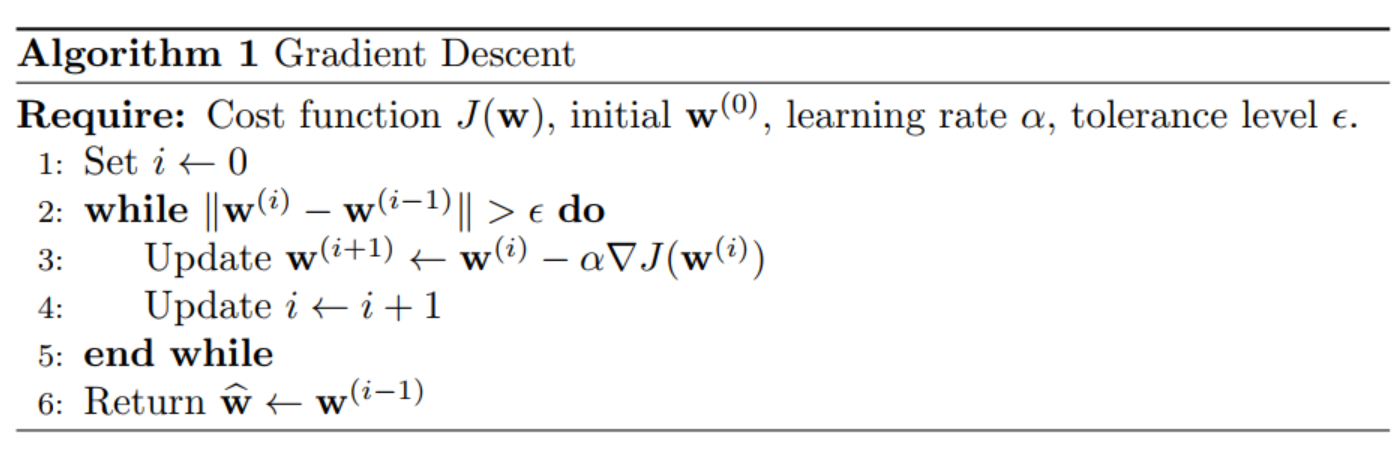

Algorithm

We will implement this algorithm with linear regression using the squared loss. Let’s now define the linear regression and optimization problems.

Linear regression

Model

The linear regression model assumes that the numerical output variable can be described as an affine combination of the features plus a noise term ,

The coefficients are called parameters, weights or coefficients of the model.

Prepend a feature vector with a constant 1

to express the linear regression model compactly as

The predicted output variable for some feature vector using learned parameters is obtained with

The parameters are learned by minimizing the cost function ,

We solve this optimization problem with the gradient descent.

Cost function and its gradient

The squared error loss

is a common loss function used for measuring the closeness of a prediction and the training data. The cost function is the average of the loss function evaluated on the training data:

where is the number of samples in the training data. It is a scalar-valued function. The quadratic nature of the loss function produces a convex cost function.

If then

and the gradient using the chain rule of calculus is

Note: When I first encountered the gradient descent I could not understand how the gradient could be calculated from discrete data points. “Derivatives require continuity, but I am given just a few data points”, I thought. But this is the wrong way to think about the gradient. The gradient is calculated using a given function (in our case, ) and has nothing to do with the actual data at this stage. Notice how we calculated the gradient above and we did not care about the data points yet. Data points are used to evaluate the gradient at a specific point (in our case, at in each iteration of the gradient descent algorithm).

Implementation in Python

| import numpy as np | |

| class GradientDescentLinearRegression: | |

| """ | |

| Linear Regression with gradient-based optimization. | |

| Parameters | |

| ---------- | |

| learning_rate : float | |

| Learning rate for the gradient descent algorithm. | |

| max_iterations : int | |

| Maximum number of iteration for the gradient descent algorithm. | |

| eps : float | |

| Tolerance level for the Euclidean norm between model parameters in two | |

| consequitive iterations. The algorithm is stopped when the norm becomes | |

| less than the tolerance level. | |

| """ | |

| def __init__(self, learning_rate=0.01, max_iterations=100000, eps=1e-6): | |

| self.learning_rate = learning_rate | |

| self.max_iterations = max_iterations | |

| self.eps = eps | |

| def predict(self, X): | |

| """Returns predictions array of shape [n_samples,1]""" | |

| return np.dot(X, self.w.T) | |

| def cost(self, X, y): | |

| """Returns the value of the cost function as a scalar real number""" | |

| y_pred = self.predict(X) | |

| loss = (y - y_pred)**2 | |

| return np.mean(loss) | |

| def grad(self, X, y): | |

| """Returns the gradient vector""" | |

| y_pred = self.predict(X) | |

| d_intercept = -2*sum(y - y_pred) # dJ/d w_0. | |

| d_x = -2*sum(X[:,1:] * (y - y_pred).reshape(-1,1)) # dJ/d w_i. | |

| g = np.append(np.array(d_intercept), d_x) # Gradient. | |

| return g / X.shape[0] # Average over training samples. | |

| def fit(self, X, y, method = "standard", verbose = True): | |

| """ | |

| Fit linear model with gradient descent. | |

| Parameters | |

| ---------- | |

| X : numpy array or sparse matrix of shape [n_samples,n_predictors] | |

| Training data | |

| y : numpy array of shape [n_samples,1] | |

| Target values. | |

| method : string | |

| Defines the variant of gradient descent to use. | |

| Possible values: "standard". | |

| verbose: boolean | |

| If True, print the gradient, parameters and the cost function | |

| for each iteration. | |

| Returns | |

| ------- | |

| self : returns an instance of self. | |

| """ | |

| self.w = np.zeros(X.shape[1]) # Initialization of params. | |

| w_hist = [self.w] # History of params. | |

| cost_hist = [self.cost(X, y)] # History of cost. | |

| for iter in range(self.max_iterations): | |

| g = self.grad(X, y) # Calculate the gradient. | |

| if method == "standard": | |

| step = self.learning_rate * g # Calculate standard gradient step. | |

| else: | |

| raise ValueError("Method not supported.") | |

| self.w = self.w - step # Update parameters. | |

| w_hist.append(self.w) # Save to history. | |

| J = self.cost(X, y) # Calculate the cost. | |

| cost_hist.append(J) # Save to history. | |

| if verbose: | |

| print(f"Iter: {iter}, gradient: {g}, params: {self.w}, cost: {J}") | |

| # Stop if update is small enough. | |

| if np.linalg.norm(w_hist[-1] - w_hist[-2]) < self.eps: | |

| break | |

| # Final updates before finishing. | |

| self.iterations = iter + 1 # Due to zero-based indexing. | |

| self.w_hist = w_hist | |

| self.cost_hist = cost_hist | |

| self.method = method | |

| return self |

grad

Returns the gradient vector. d_intercept is a partial derivative of the cost function w.r.t. the intercept . d_x is a partial derivative of the cost function w.r.t. to feature coefficients ().

fit

Line 63 initializes parameters as a zero vector. Initialization can be done arbitrarily here because we are dealing with a convex loss function. For convex problems there is only one stationary point, which is also the global minimum.

Note: Sometimes we can save computation time by warm-starting the optimization procedure with a good initial guess. For example, iterations of a -fold cross-validation may use parameters corresponding to a minimum loss in the previous iterations.

Lines 67-85 implement the gradient descent algorithm. Line 69 calculates the gradient, line 74 updates the parameters, line 77 calculates the value of the cost function. An early stoppage occurs when the Euclidean norm of the difference in parameters in the last two iterations becomes less than the tolerance level.

Learning rate and convergence

“Setting the learning rate is a dark art. Only if it is sufficiently small will gradient descent converge”3. Below we take a look at how a choice of impacts convergence.

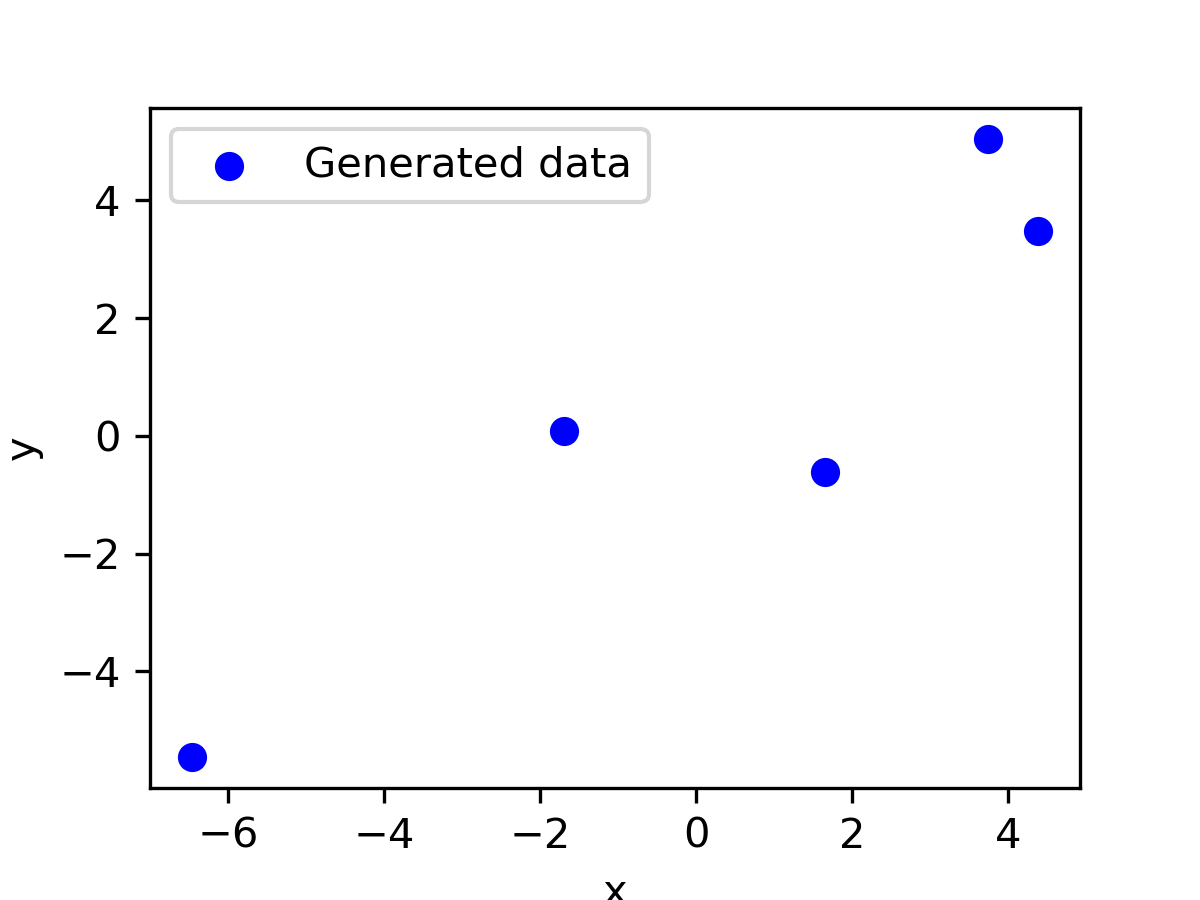

Let’s generate and data for the linear regression and use gradient descent to fit a straight line. Full code is available at my GitHub repository. Generated data looks as follows:

We fit the line using the gradient descent with GradientDescentLinearRegression().fit(X, y, "standard"). The last argument defines a variant of gradient descent to use and standard means the standard gradient descent. We will be adding more methods in the following blog posts.

Slow convergence

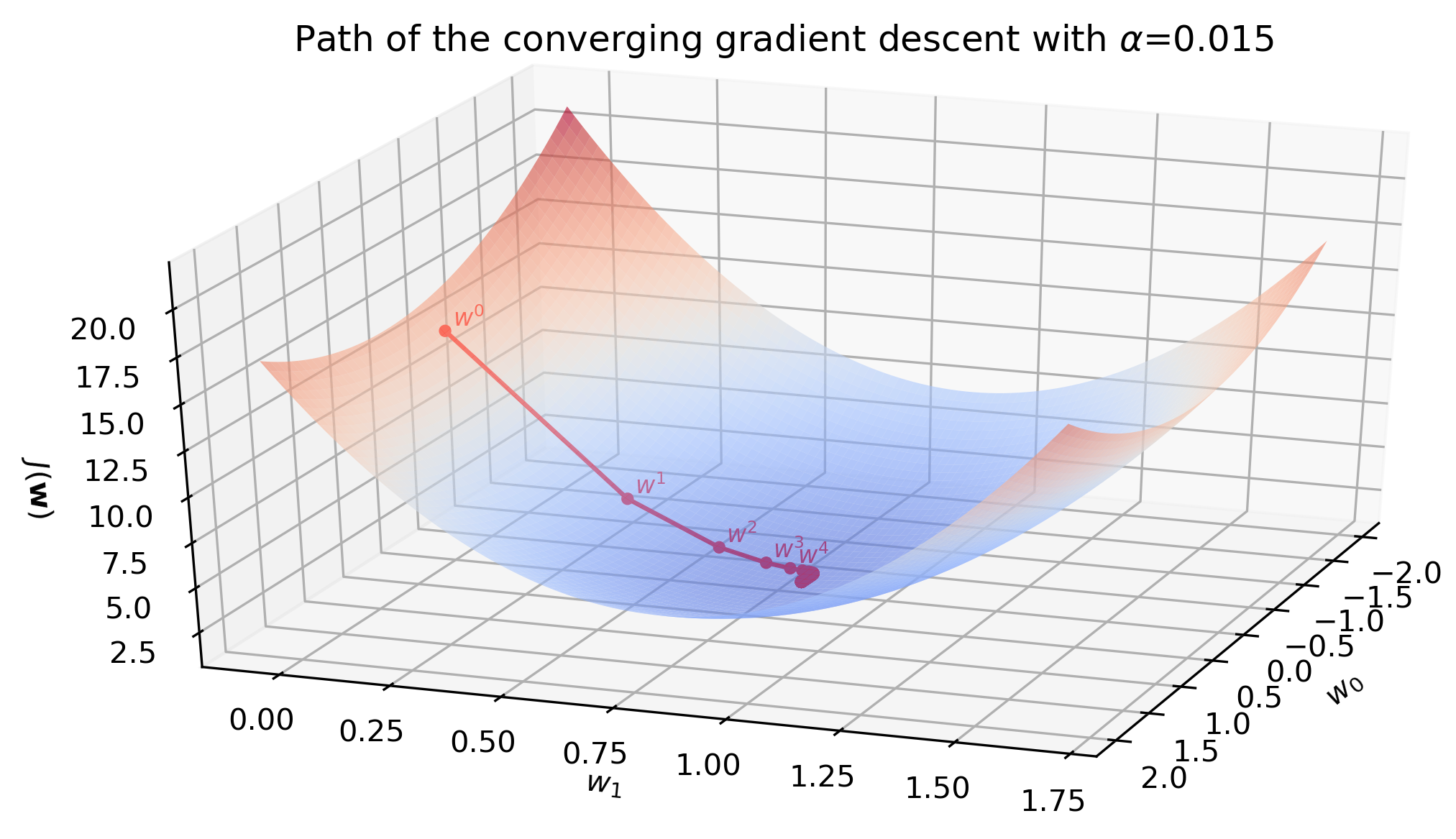

With learning rate the algorithm slowly converges in 3058 iterations. The surface plot below is the surface of the cost function . Red dots are updated parameters from initial to optimal .

The animation below shows how the line is slowly fitted though the first 50 iterations.

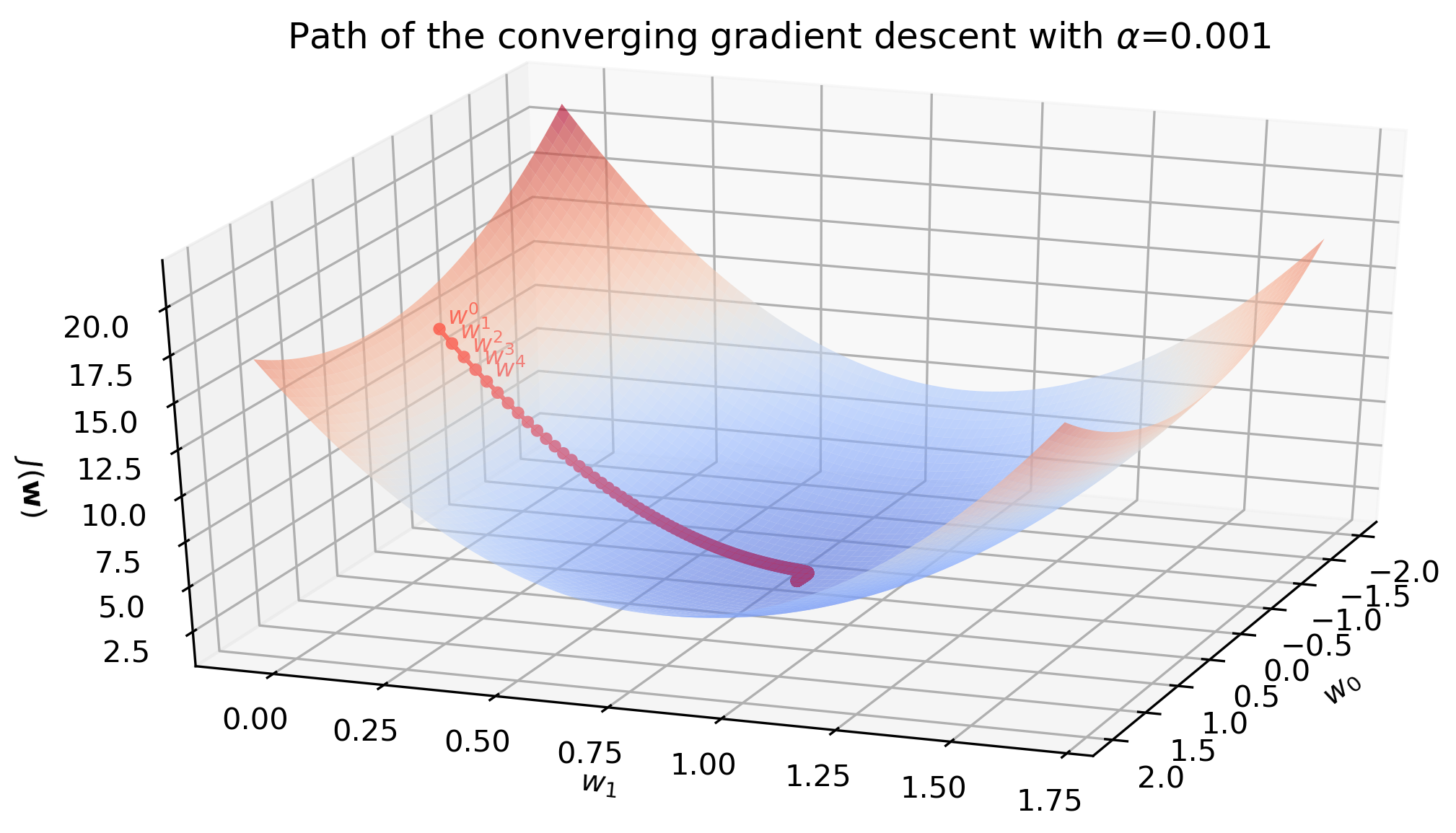

Good convergence

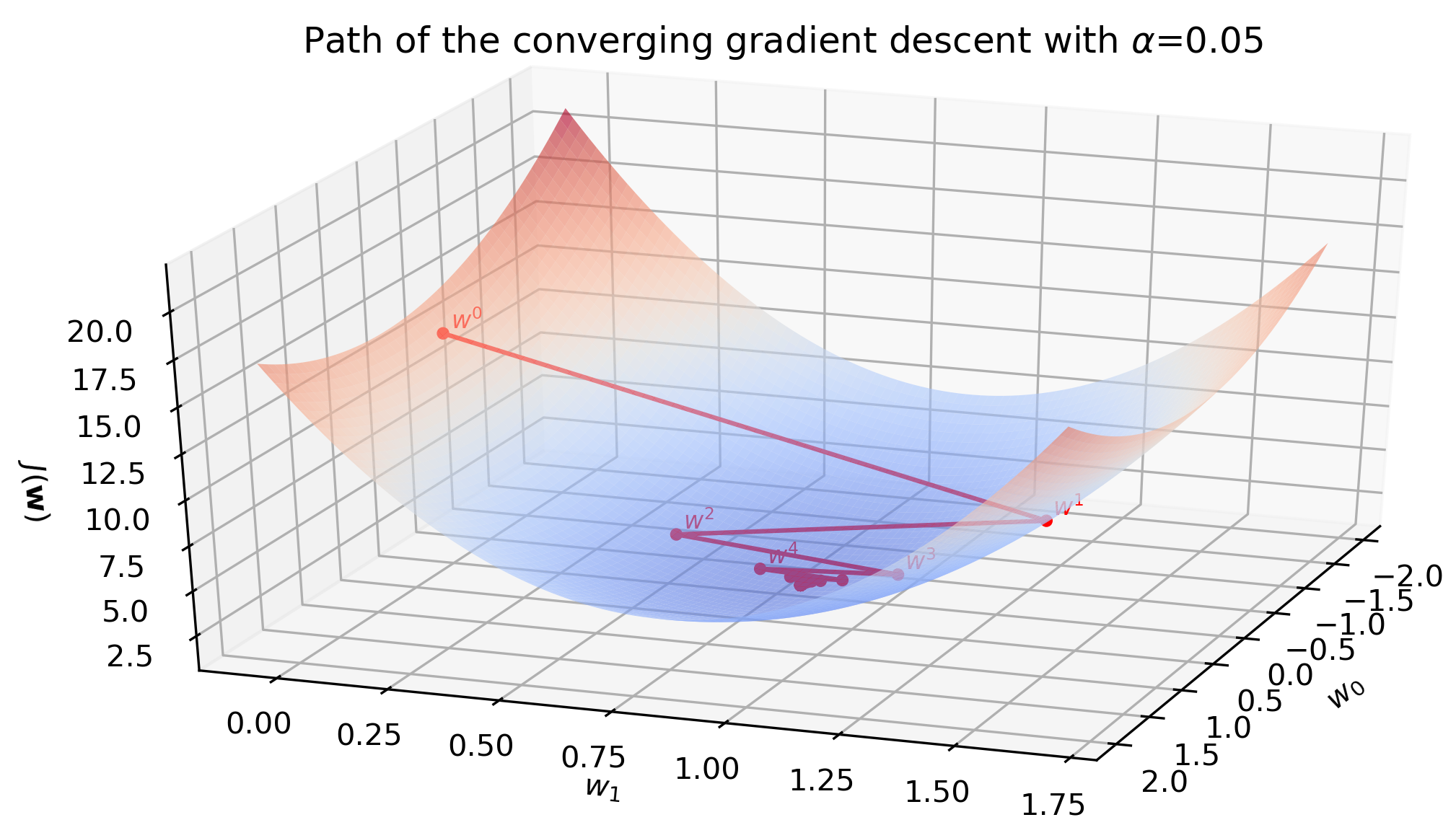

Learning rate seems to be a better guess and the algorithm converges in 292 iterations. After the first five steps the parameters are fairly close to the optimal solution.

The animation below illustrates the last statement - after the first five iterations the line barely moves.

Jumps

When the learning rate is large (but not too large yet), convergence happens in jumps. The change in is large enough to take the updated parameters to the other side of the surface, surpassing the minimum point. With learning rate the convergence shows this jumping behavior. Nevertheless, the algorithm converges because the magnitude of the gradient becomes smaller after each iteration. Convergence takes 97 iterations.

This surface plot also reveals that the gradient descent algorithm does not really slide down the surface. In fact it is a step-wise movement:

- Start at , which is .

- After the first update of the parameters, imagine the red dot moving in the plane to , which is .

- Then the cost is recalculated using and the red dot descends to , which is . But it is not guaranteed to descend. If the step size in plane was too large, the red dot would actually ascend and the algorithm would diverge.

The aimation below illustrates the jumping behavior. With time, oscillations become smaller and eventually the algorithm converges.

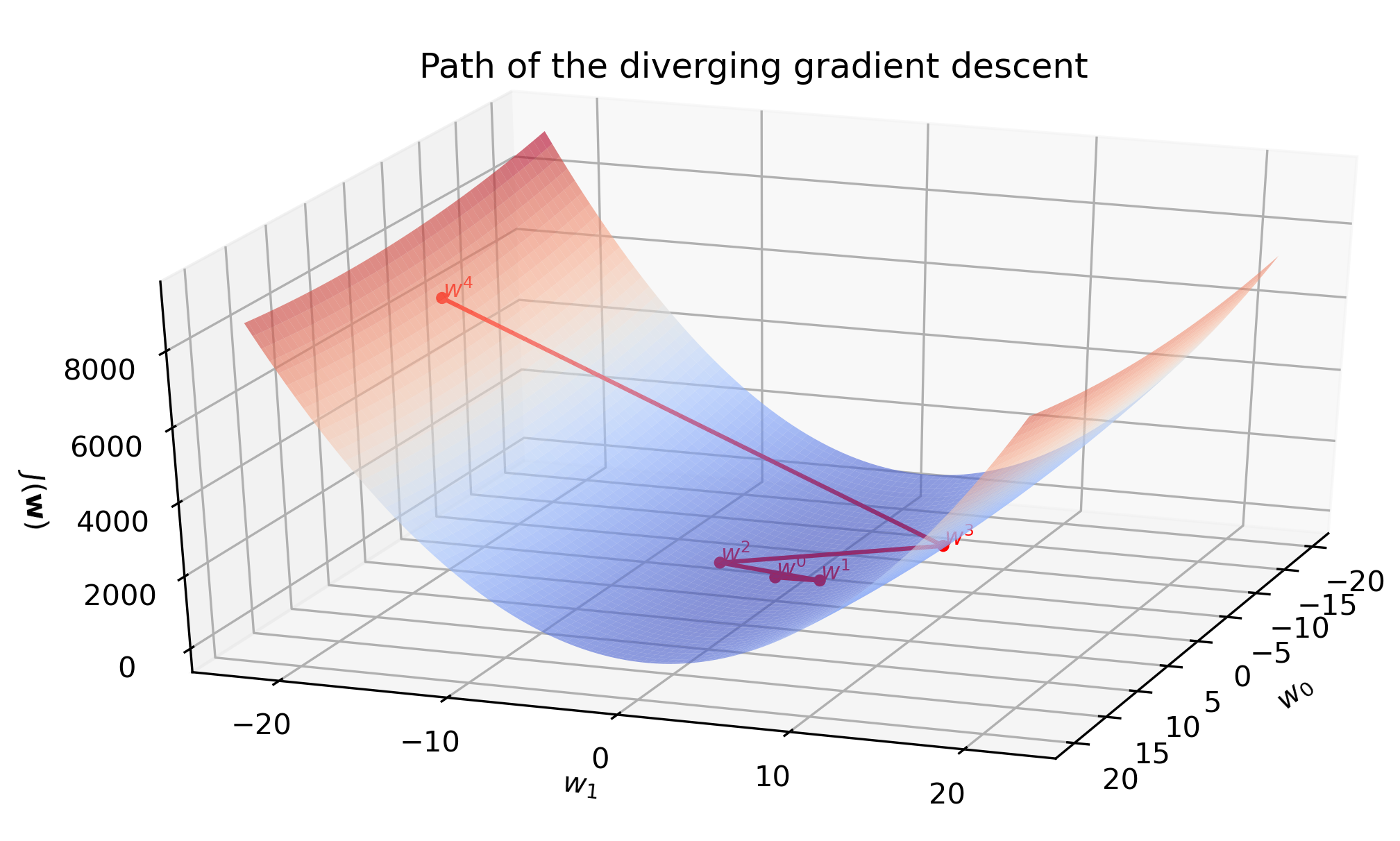

Divergence

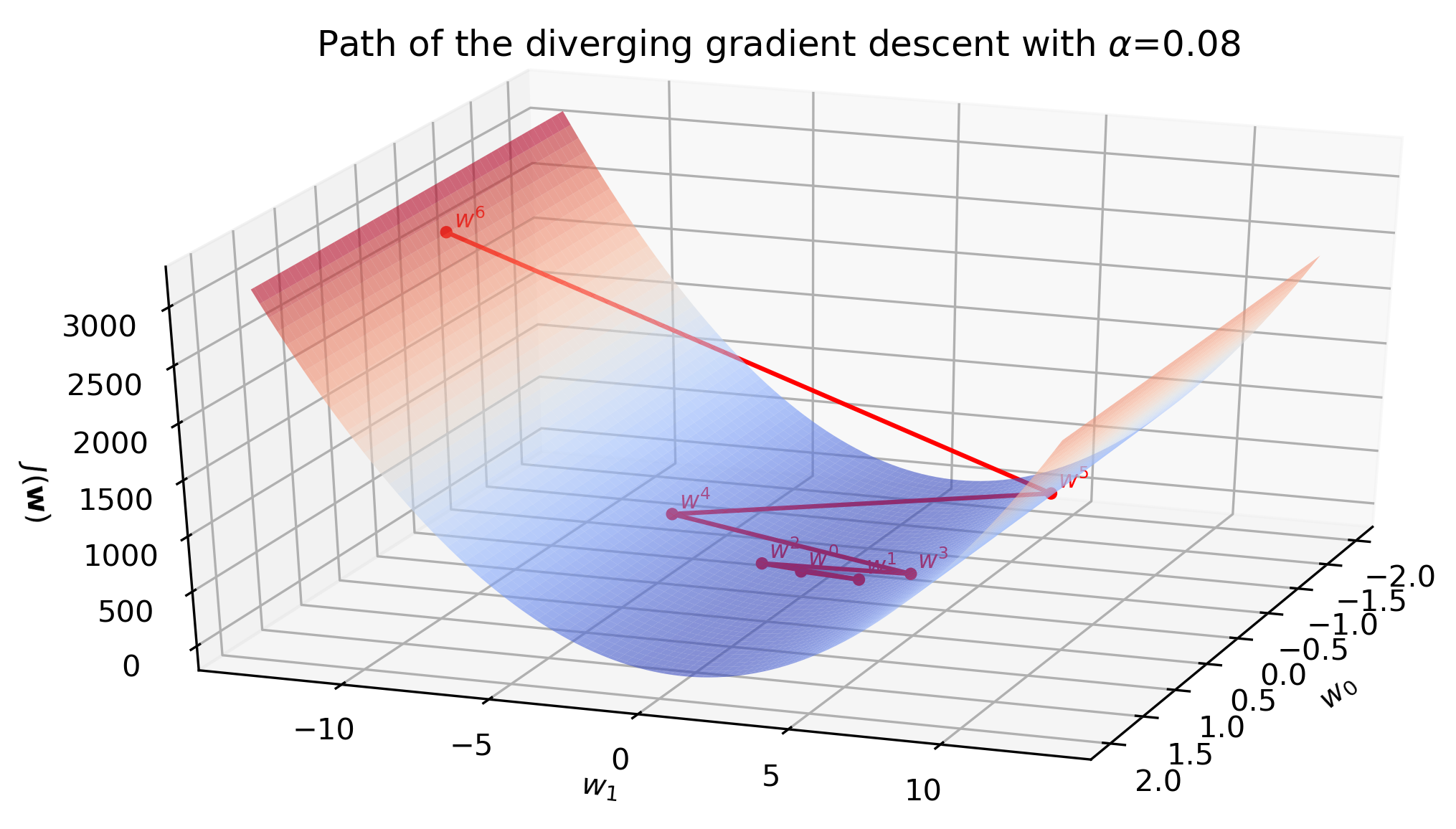

Learning rate turns out to be too high. The magnitude of the gradient grows after the first iteration, which increases the step size for the next iteration. In this fashion, parameters spiral away from the minimum very rapidly - compare the scale of the axis on the previous plot and the one below with only seven iterations.

The fitted line barely moves during the first few iterations due to a huge scale of the plot. However, later we see that the line starts to swing wildly and quickly approaches a vertical where the cost becomes infinitely large.

We would like to get rid of the dark art of guessing learning rates. A safe (but sometimes slow) choice is to set , where is any initial positive value and is the iteration counter. This guarantees that it will eventually become small enough to converge4. With the same generated data and the algorithm converged in 2384 iterations.

In the next post we will look at the adaptive gradient algorithm AdaGrad, which performs more informative gradient-based learning by adapting the learning rate for each feature individually and does not require guessing .

I would appreciate any comments or suggestions. Please leave them below, no login required if you check “I’d rather post as a guest”.